In this article we walk through creating, with Terraform and Rancher2, all the infrastructure pieces on Bluvalt OpenStack that are needed for a fully provisioned Kubernetes cluster, including integration with cloud-provider-openstack.

Getting started with Infrastructure

- Create and log in to a Linux VM. Inside the VM, create an SSH key with this command:

ssh-keygen

- Clone the repository terraform-rancher2.

- Install the OpenStack CLI and configure it using these instructions.

- Go into the openstack folder:

cd openstack/

- Modify the variables in terraform.tfvars to match your current cloud environment.

For the RUH2 cloud, use:

- openstack_auth_url: https://api-ruh2-vdc.bluvalt.com/identity/v3
- openstack_domain: ruh2
- public_key: the public SSH key you generated. Print it with cat ~/.ssh/id_rsa.pub, then copy and paste it.

For the JED1 cloud, use:

- openstack_auth_url: https://api-jed1-vdc.bluvalt.com/identity/v3
- openstack_domain: jed1
- public_key: the public SSH key you generated. Print it with cat ~/.ssh/id_rsa.pub, then copy and paste it.

It is important to either uncomment the variables openstack_project, openstack_username and openstack_password in terraform.tfvars, or export them as environment variables with the prefix TF_VAR_.

To export the credentials as environment variables:

export TF_VAR_openstack_username=Your-username

export TF_VAR_openstack_password=Your-password

export TF_VAR_openstack_project=Your-project
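Terraform only picks these values up if they are exported in the shell that runs it. A small guard like the following can be run before terraform plan to fail early when one of them is missing or empty; it is a sketch, and require_tf_vars is a hypothetical helper, not part of the repository.

```shell
# Hypothetical helper: succeed only if every OpenStack credential is
# exported as a non-empty TF_VAR_* environment variable.
require_tf_vars() {
  local missing=0 name
  for name in TF_VAR_openstack_username TF_VAR_openstack_password TF_VAR_openstack_project; do
    # printenv only sees exported variables, which is also what Terraform reads.
    if [ -z "$(printenv "$name")" ]; then
      echo "missing or empty: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: require_tf_vars && terraform plan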

Other variables, such as rancher_node_image_id, external_network and the flavors, can be obtained from the OpenStack CLI by invoking:

Image list (pick an Ubuntu image):

openstack image list

Network name:

openstack network list --external

Flavors:

openstack flavor list
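To make the output easier to copy into terraform.tfvars, the same commands can be narrowed with the CLI's standard output options: -f value prints bare values without table borders, and -c (repeatable) selects columns. A small sketch; the function names are hypothetical.

```shell
# Print "<ID> <Name>" for every image whose name contains "ubuntu" (any case);
# the ID goes into rancher_node_image_id.
ubuntu_images() {
  openstack image list -f value -c ID -c Name | grep -i ubuntu
}

# Print the names of external networks (candidates for external_network).
external_networks() {
  openstack network list --external -f value -c Name
}

# Print the available flavor names (candidates for the flavor variables).
flavor_names() {
  openstack flavor list -f value -c Name
}
```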

The RKE configuration can be adjusted and customized in rancher2.tf; for the available options, check the rancher2 provider documentation for rancher2_cluster.

It is important to keep the kubelet extra_args that select the external cloud provider, because the integration with cloud-provider-openstack depends on them.
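Because the integration silently depends on that setting, a quick pre-flight check before terraform apply can catch an accidental removal. This sketch assumes the setting appears in rancher2.tf as a map entry of the form cloud-provider = "external" (key quoted or not); the actual layout in the repository may differ, and has_external_cloud_provider is a hypothetical helper.

```shell
# Hypothetical pre-flight check: succeed only if the given Terraform file
# (default rancher2.tf) still contains cloud-provider = "external".
has_external_cloud_provider() {
  grep -Eq '"?cloud-provider"?[[:space:]]*=[[:space:]]*"external"' "${1:-rancher2.tf}"
}
```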

Initialize Terraform

From your favorite command line, run the following to initialize the working directory containing the Terraform configuration files:

terraform init

Plan Terraform

To see the resources you are going to deploy, run the following:

terraform plan

If you are happy with the resulting plan, run the following command to create the environment:

terraform apply --auto-approve
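With --auto-approve, Terraform applies whatever it computes at apply time without asking. A common alternative is to save the reviewed plan to a file and apply exactly that plan; Terraform does not prompt for confirmation when given a saved plan file. A minimal sketch, where the function names and the plan file name tfplan are arbitrary choices:

```shell
# Step 1: initialise and write the execution plan to a file for review.
plan_deployment() {
  terraform init && terraform plan -out="${1:-tfplan}"
}

# Step 2: after reviewing the plan output, apply exactly that saved plan.
apply_deployment() {
  terraform apply "${1:-tfplan}"
}
```

If you need to look at the saved plan again before applying it, terraform show tfplan prints it.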

Once all resources have been created, the output will look something like this:

Apply complete! Resources: 25 added, 0 changed, 0 destroyed.

Outputs:

rancher_url = [
  "https://xx.xx.xx.xx/",
]
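The URL can also be read back later, and Rancher may need a moment before it answers. The sketch below extracts the first URL from terraform output -json and polls it with curl. The helper names and polling defaults are hypothetical, and the use of curl -k assumes Rancher is still serving a self-signed certificate.

```shell
# Print the first URL in the rancher_url output. The output is a list, so
# "terraform output -json rancher_url" prints a JSON array of strings.
rancher_url() {
  terraform output -json rancher_url | grep -o 'https://[^"]*' | head -n 1
}

# Poll the Rancher URL until it answers, up to $1 attempts (default 30)
# spaced $2 seconds apart (default 10). -k skips certificate verification.
wait_for_rancher() {
  local url tries="${1:-30}" delay="${2:-10}"
  url=$(rancher_url)
  [ -n "$url" ] || { echo "rancher_url output not found" >&2; return 1; }
  while [ "$tries" -gt 0 ]; do
    if curl -ksf -o /dev/null "$url"; then
      echo "Rancher is reachable at $url"
      return 0
    fi
    tries=$((tries - 1))
    sleep "$delay"
  done
  echo "Rancher did not answer at $url" >&2
  return 1
}
```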

At this point, use the rancher_url from the output above to log in to the Rancher instance:

- Username: admin
- Password: admin123

Wait for all Kubernetes nodes to be discovered, registered, and active.

Integration with OpenStack

Integrating the cloud provider requires some extra configuration. As you may notice, all the nodes in the Rancher UI carry the taint node.cloudprovider.kubernetes.io/uninitialized. Passing the --cloud-provider=external flag to the kubelet makes it wait for the cloud provider to initialize the node: the taint marks the node as needing a second initialization from an external controller before work can be scheduled on it.

- Edit the file manifests/cloud-config with the access information for your OpenStack environment.

- Log in to your demo-rancherserver host using its floating IP and the private SSH key you generated in the first step.

- Install kubectl:

- Update packages:

sudo apt update

- Download the required key:

sudo curl -fsSLo /usr/share/keyrings/kubernetes-archive-keyring.gpg https://packages.cloud.google.com/apt/doc/apt-key.gpg

- Add the Kubernetes repository that contains kubectl:

echo "deb [signed-by=/usr/share/keyrings/kubernetes-archive-keyring.gpg] https://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list

- Update packages:

sudo apt update

- Install kubectl:

sudo apt-get install -y kubectl

Now, clone or copy the following files from the terraform-rancher2 repository:

- manifests/cloud-controller-manager-roles.yaml
- manifests/cloud-controller-manager-role-bindings.yaml
- manifests/openstack-cloud-controller-manager-ds.yaml
- manifests/cinder-csi-plugin.yaml
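The secret is built from a plain INI file in the format read by cloud-provider-openstack. As a minimal sketch, assuming the TF_VAR_* credentials exported earlier and the identity endpoint and domain listed for your region, its [Global] section could be generated as follows. write_cloud_conf is a hypothetical helper; it assumes the user and project live in the same domain, and your environment may need further keys (for example region) that it does not write.

```shell
# Hypothetical helper: write a minimal [Global] section for
# cloud-provider-openstack from the exported TF_VAR_* credentials.
# Arguments: identity endpoint, OpenStack domain, output file.
write_cloud_conf() {
  local auth_url="$1" domain="$2" out="$3"
  # Subshell so the restrictive umask (the file holds a password) does not leak.
  (
    umask 077
    cat > "$out" <<EOF
[Global]
auth-url=${auth_url}
username=${TF_VAR_openstack_username}
password=${TF_VAR_openstack_password}
tenant-name=${TF_VAR_openstack_project}
domain-name=${domain}
EOF
  )
}
```

For the RUH2 cloud, for example: write_cloud_conf https://api-ruh2-vdc.bluvalt.com/identity/v3 ruh2 manifests/cloud.conf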

- Create a secret containing the OpenStack cloud configuration in the kube-system namespace:

kubectl create secret -n kube-system generic cloud-config --from-file=manifests/cloud.conf

- Create the RBAC resources and the openstack-cloud-controller-manager DaemonSet, then wait until all the pods in the kube-system namespace are up and running:

kubectl apply -f manifests/cloud-controller-manager-roles.yaml

kubectl apply -f manifests/cloud-controller-manager-role-bindings.yaml

kubectl apply -f manifests/openstack-cloud-controller-manager-ds.yaml

- Create the cinder-csi-plugin resources, a set of cluster roles, cluster role bindings, StatefulSets and a StorageClass used to communicate with OpenStack (Cinder):

kubectl apply -f manifests/cinder-csi-plugin.yaml
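Once the cloud controller manager has initialized a node, it removes the node.cloudprovider.kubernetes.io/uninitialized taint, so watching that taint is one way to tell that the deployment has taken effect. The sketch below lists the nodes still carrying the taint and waits until none do; the function names and polling defaults are hypothetical.

```shell
# Print the names of nodes that still carry the "uninitialized" taint.
uninitialized_nodes() {
  local out
  out=$(kubectl get nodes \
    -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.spec.taints[*].key}{"\n"}{end}') || return 1
  printf '%s\n' "$out" |
    awk '/node\.cloudprovider\.kubernetes\.io\/uninitialized/ {print $1}'
}

# Poll until no node carries the taint, up to $1 attempts (default 30)
# spaced $2 seconds apart (default 10). A failed kubectl call counts as
# "not yet" rather than success.
wait_for_node_init() {
  local tries="${1:-30}" delay="${2:-10}" pending=""
  while [ "$tries" -gt 0 ]; do
    if pending=$(uninitialized_nodes) && [ -z "$pending" ]; then
      echo "all nodes initialized"
      return 0
    fi
    tries=$((tries - 1))
    sleep "$delay"
  done
  echo "nodes still tainted or unreachable: $pending" >&2
  return 1
}
```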

At this point, openstack-cloud-controller-manager and cinder-csi-plugin have been deployed, and they are able to obtain useful information from OpenStack, such as external IP addresses and zone information.
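One way to check that the Cinder CSI plugin can actually provision volumes is to create a small PersistentVolumeClaim against its StorageClass. A minimal sketch follows; the helper name and the default claim name cinder-test-pvc are hypothetical, and the StorageClass name is whatever manifests/cinder-csi-plugin.yaml defines (kubectl get storageclass lists it).

```shell
# Hypothetical smoke test: create a 1Gi ReadWriteOnce claim.
# Arguments: StorageClass name, claim name (default cinder-test-pvc).
create_test_pvc() {
  local sc="$1" name="${2:-cinder-test-pvc}"
  kubectl apply -f - <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: ${sc}
  resources:
    requests:
      storage: 1Gi
EOF
}
```

After a short while, kubectl get pvc cinder-test-pvc should show the claim as Bound, and a matching volume should appear in openstack volume list. If the StorageClass uses volumeBindingMode: WaitForFirstConsumer, the claim stays Pending until a pod mounts it. Remove it afterwards with kubectl delete pvc cinder-test-pvc.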

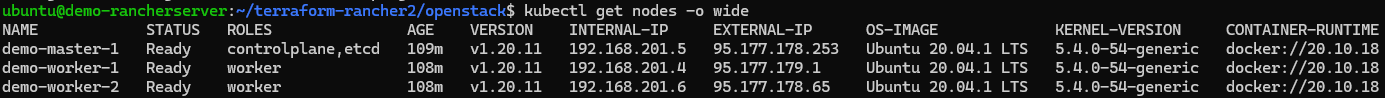

$ kubectl get nodes -o wide

The output:

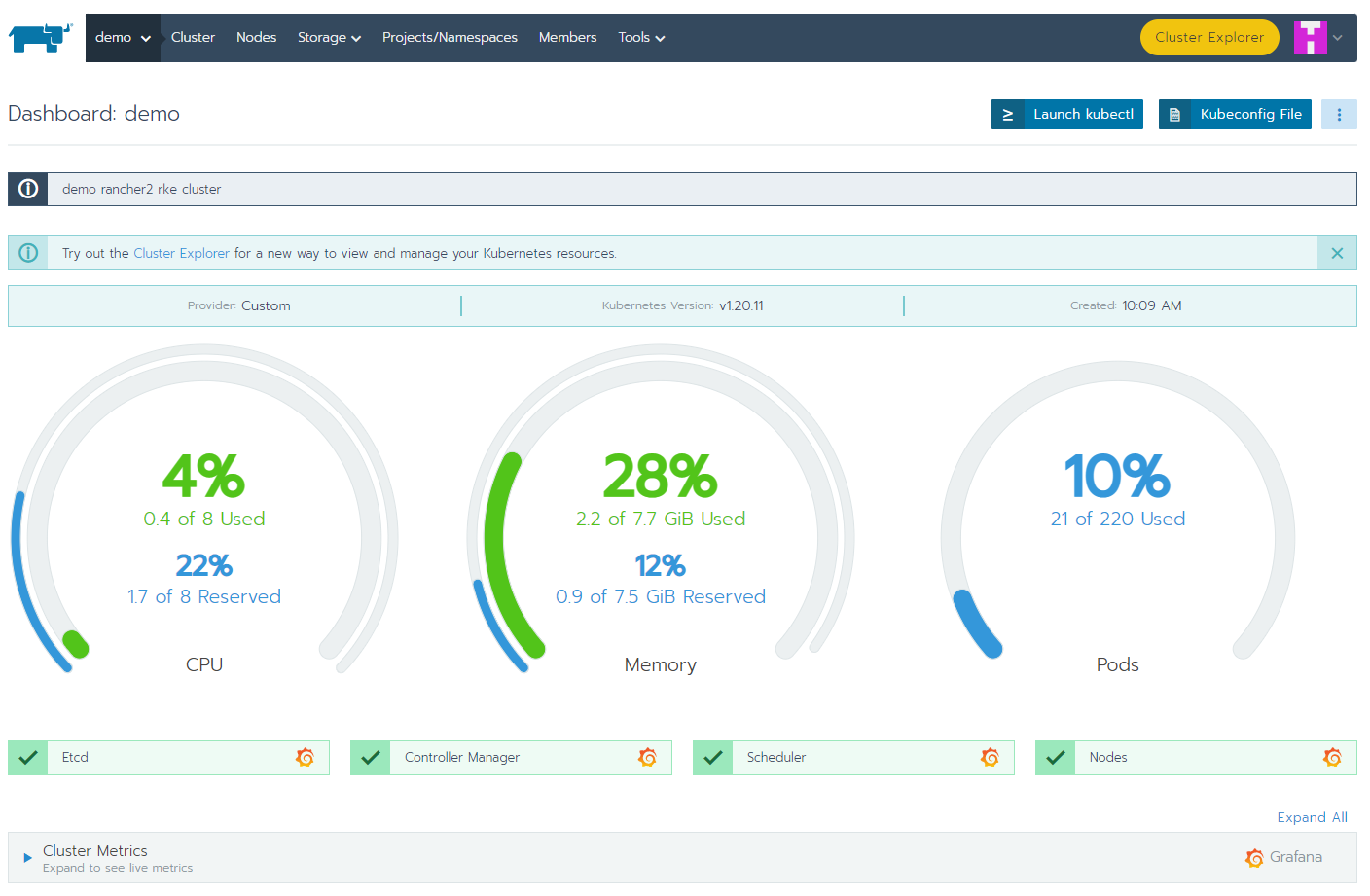

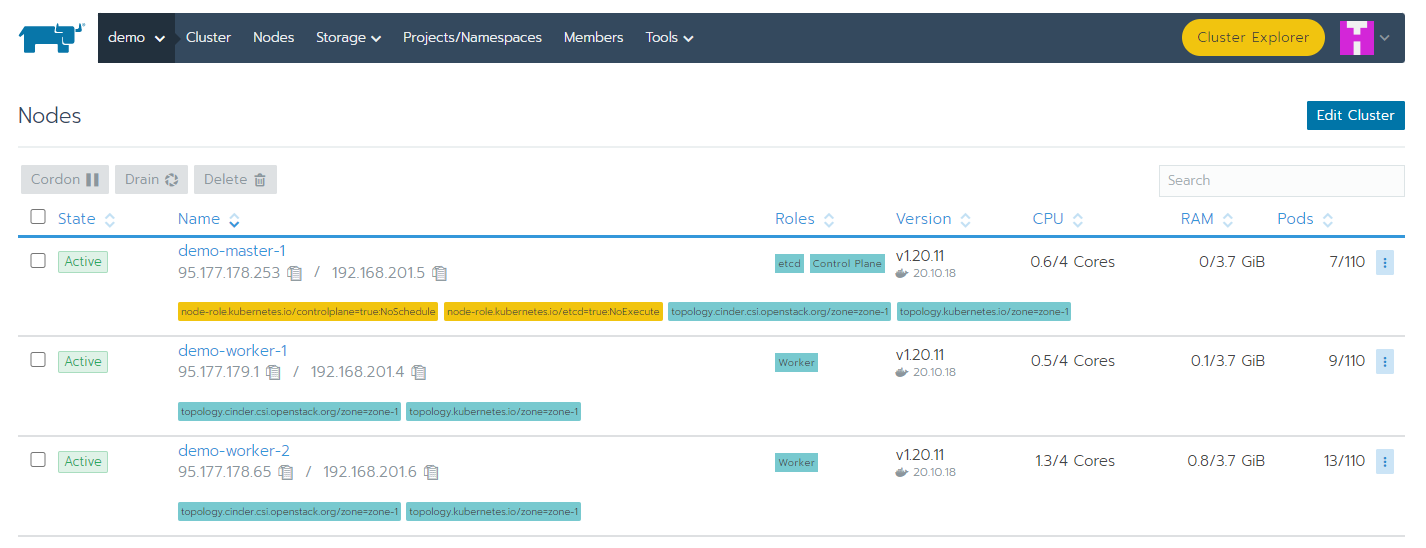

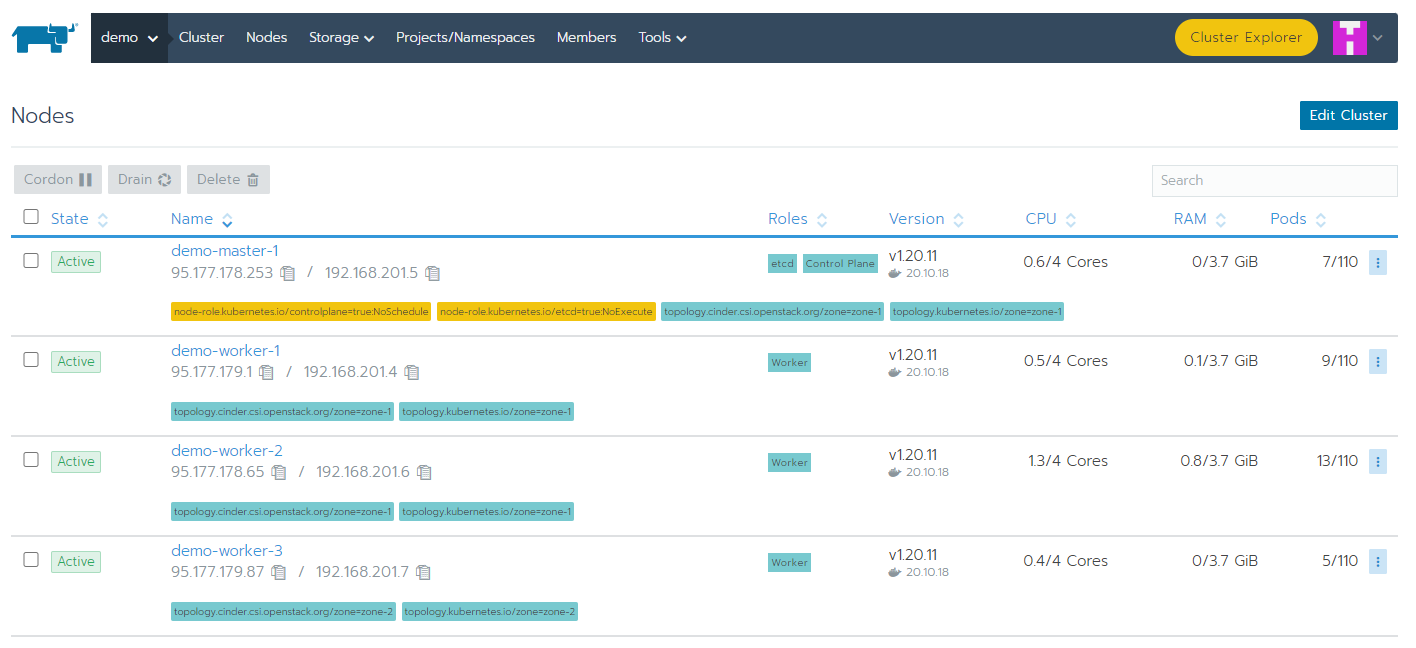

From the Rancher UI, as shown in the Nodes tab, all nodes are active and labeled with their OpenStack zones.
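The zone labels can also be checked from the command line. The sketch below assumes the controller sets the well-known topology.kubernetes.io/zone label (older releases used failure-domain.beta.kubernetes.io/zone instead) and prints every node that does not have it yet; the function name is hypothetical.

```shell
# Print the names of nodes without a topology.kubernetes.io/zone label.
# No output means every node has been labeled with its zone.
nodes_without_zone() {
  local out
  out=$(kubectl get nodes -o \
    jsonpath='{range .items[*]}{.metadata.name}{" "}{.metadata.labels.topology\.kubernetes\.io/zone}{"\n"}{end}') || return 1
  # A line with a single field is a node name with an empty zone.
  printf '%s\n' "$out" | awk 'NF == 1 {print $1}'
}
```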

Scalability

With infrastructure as code (IaC), scaling becomes easy: reaching a new desired state takes little time and effort.

All you have to do is change the node counts, count_master or count_worker_nodes, and run terraform apply again. For example, let's increase count_worker_nodes by 1.

Edit the terraform.tfvars file and set count_worker_nodes = "3" as follows:

...
# Nodes server Image ID
rancher_node_image_id = "92f2db82-66c6-463e-8bc8-41f0e48f7402" ## f99bb6f7-658d-4f7c-840d-...

# Count of agent nodes with role master (controlplane,etcd)
count_master = "1"

# Count of agent nodes with role worker
count_worker_nodes = "3"

# Resources will be prefixed with this to avoid clashing names
prefix = "demo"
...

Now navigate to the ~/terraform-rancher2/openstack folder and run:

terraform apply
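The worker count can also be overridden for a single run without editing terraform.tfvars, because a -var option on the command line takes precedence over values loaded from terraform.tfvars. A hedged sketch, where scale_workers is a hypothetical helper:

```shell
# Hypothetical helper: apply with a different worker count for this run only.
scale_workers() {
  case "$1" in
    ''|*[!0-9]*) echo "usage: scale_workers <count>" >&2; return 2 ;;
  esac
  terraform apply -var "count_worker_nodes=$1"
}
```

Keep in mind that the override is not stored anywhere: a later plain terraform apply reads count_worker_nodes from terraform.tfvars again and would scale the cluster back. Keeping the desired count in terraform.tfvars, as above, is the more predictable approach.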

A few minutes later, after Terraform refreshes the state and applies the changes, the output looks like this:

Apply complete! Resources: 3 added, 0 changed, 0 destroyed.

Outputs:

rancher_url = [
  "https://xx.xx.xx.xx",
]

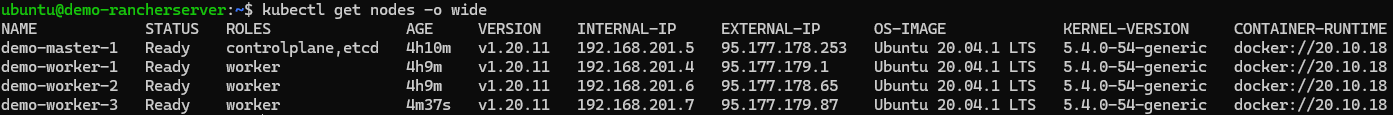

After a couple of minutes, once the new node has registered, run:

$ kubectl get nodes -o wide

From Rancher UI:

The cluster can be scaled down by decreasing the node count in terraform.tfvars and running terraform apply again. The node's instance is deleted, and cloud-provider-openstack detects this and removes the node from the cluster.
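Whether scaling up or down, you can wait for the cluster to reach the expected size from the command line. A minimal sketch, assuming the cluster consists of exactly count_master + count_worker_nodes nodes and that kubectl is configured on the host where it runs; the function name and polling defaults are hypothetical.

```shell
# Poll until "kubectl get nodes" lists exactly $1 nodes, all with STATUS
# Ready, trying up to $2 times (default 30) with $3 seconds between
# attempts (default 10).
wait_for_node_count() {
  local want="$1" tries="${2:-30}" delay="${3:-10}" nodes total ready
  while [ "$tries" -gt 0 ]; do
    if nodes=$(kubectl get nodes --no-headers); then
      # Column 2 of "kubectl get nodes" output is STATUS.
      total=$(printf '%s\n' "$nodes" | awk 'NF {n++} END {print n+0}')
      ready=$(printf '%s\n' "$nodes" | awk '$2 == "Ready" {n++} END {print n+0}')
      if [ "$total" -eq "$want" ] && [ "$ready" -eq "$want" ]; then
        echo "$ready/$want nodes Ready"
        return 0
      fi
    fi
    tries=$((tries - 1))
    sleep "$delay"
  done
  echo "cluster did not reach $want Ready nodes" >&2
  return 1
}
```

With count_master = "1" and count_worker_nodes = "3", as in the terraform.tfvars example, that would be wait_for_node_count 4.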

Cleaning up

To clean up all resources created by this Terraform configuration, run:

terraform destroy

References